Archive for the ‘Caching’ Category

Sunday, April 28th, 2013

With all the talk of CQRS, the area that doesn’t get enough treatment (in my opinion) is that of queries. Many are already beginning to understand the importance of task-based UIs and how that aligns to the underlying commands being sent, validated, and processed in the system as well as the benefits of messaging-centric infrastructure (like NServiceBus) for handling those commands reliably. When it comes to queries, though, it isn’t nearly as well understood what it means for a query to be “task based”.

Starting with CRUD

Let’s start with a traditional CRUD application and work our way out from there.

In these environments, we often see users asking us to build “excel-like” screens that allow them to view a set of data as well as sort, filter, and group that data along various axes. While we might not get this requirement right away, after some time users begin to ask us to allow them to “save” a certain “query” that they have set up, providing it some kind of name.

That, right there, is a task-based query and it is the beginning of deeper domain insight.

Pattern matching

Any time a user is repeatedly running the same query (this can be once a day or some other unit of time) there is some scenario that the business is trying to identify and is using that user as a pattern-matching engine to see if the data indicates that that scenario has occurred.

It’s quite common for us to get a requirement to add some field (often a boolean or enum) to an entity which defaults to some value and then see that same field used in filtering other queries. These measures are sometimes instituted as a temporary stop-gap while a larger feature is being implemented, though (as the saying goes) there is nothing more permanent than a temporary solution.

Where we developers go wrong

The thing is, many developers don’t notice these sorts of things happening because we don’t actually look at the kinds of queries users are running.

One excellent technique to better understand a domain is to sit down with your users while they’re working and ask them, “what made you run that query just now?”, “why that specific set of filters?”.

What I’ve noticed over the years is that our users find very creative ways to achieve their business objectives despite the limitations of the system that they’re working with. We developers ultimately see these as requirements, but they are better interpreted as workarounds.

I’ll talk some more about how a software development organization should deal with these workarounds in a future post, but I want to focus back in on the queries for now.

Oh, and don’t get me started on caching or NoSQL, not that I think that those tools don’t provide value – they do, but they’re only relevant once you know which business problem you’re solving and why.

Not all queries are created equal

Even before bringing up the questions I described in the previous section, any time you get query-centric requirements the first question to ask is “how often will the user be running this specific query?”.

If the answer is that the specific query will be run periodically (every day, week, etc), then drill deeper to see what pattern the user will be looking for in the data. If the person you’re talking to doesn’t know how to answer that question, then go find someone who does. Every periodic query I’ve seen has some pattern behind it – and in my conversations with thousands of other developers over the years, I’ve seen that this is not just my personal experience.

But there is a case where a query does get run repeatedly without there being a pattern behind it.

I know this sounds like I’m contradicting myself, but the distinction is the word “specific” that I emphasized above.

There are certain users who behave very differently from other users – these users are often doing what I call research, i.e. the “I don’t know what I’m looking for but I’ll know it when I see it” people.

These researchers query the data in the system repeatedly, but they tend to run different queries all the time. This is the reason why traditional data warehouse type solutions don’t tend to work well for them. Data warehouses are optimized for running specific queries repeatedly.

Keeping the Single-Responsibility Principle in mind – we should not try to create a single query mechanism that will address these two very different and independently evolving needs.

And now on to Search

Search is a feature that is needed in many systems and whose complexity is greatly underestimated.

While the developer community has taken some decent strides in understanding that search needs to be treated differently from other queries, the common Lucene/Solr solutions that are applied are often overwhelmed by the size of the data set on which the business operates.

The problem is compounded by our user population being spoiled by Google – that simple little text box and voila, exactly what you’re looking for magically appears instantaneously. They don’t understand (or care) how much engineering effort went into making that “just work”.

Lucene and Solr work well when your data set isn’t too large, and then they become pretty useless as the quality of their results degrades. The thing is that many of us in IT tend to work on projects where we have an unrealistically small data set that we use to test the system and, at these volumes, it looks like our solutions work great. But if you have 20 million customers, do you think a full text search on “Smith” is going to find just the right one?

Larger data sets require a relevance engine – something that feeds off of what users do AFTER the query to influence the results of future queries. Did the user page to the next screen? That needs to be fed back in. Did they click on one of the results? That needs to be fed back in too. Did they go back to the search and do another similar search right after looking at a result – that should possibly undo the previous feedback.

And that’s just relevance for beginners.
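To make the feedback loop above concrete, here is a minimal sketch in Python. The class name, the event methods, and the weights are all illustrative assumptions, not taken from any real search product; a production relevance engine would be considerably more involved.

```python
from collections import defaultdict


class RelevanceFeedback:
    """Toy feedback store: adjusts per-(query, result) scores from user behavior.

    The weights below are arbitrary placeholders chosen for illustration.
    """

    CLICK_BOOST = 1.0      # user clicked a result
    PAGED_PENALTY = 0.25   # user paged past a result without clicking it

    def __init__(self):
        self.scores = defaultdict(float)   # (query, result_id) -> score
        self.last_click = None             # most recent (query, result_id) clicked

    def clicked(self, query, result_id):
        self.scores[(query, result_id)] += self.CLICK_BOOST
        self.last_click = (query, result_id)

    def paged_past(self, query, result_ids):
        for result_id in result_ids:
            self.scores[(query, result_id)] -= self.PAGED_PENALTY

    def searched_again(self):
        """User re-searched right after viewing a result: undo that boost."""
        if self.last_click is not None:
            self.scores[self.last_click] -= self.CLICK_BOOST
            self.last_click = None

    def rank(self, query, result_ids):
        """Order candidates by accumulated feedback, highest first."""
        return sorted(result_ids, key=lambda r: self.scores[(query, r)], reverse=True)
```

Even this toy version needs a steady stream of post-query behavior before its rankings mean anything, which is the point of the paragraphs that follow.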

You know what makes Google, you know, Google? It’s that they have this absolutely massive data set of what users do after the query that informs which results they return when. You probably don’t have that. That and search is/was their main business for many years – I’m betting that it’s not your main business.

You should discuss this with your stakeholders the next time they ask for search functionality in your system.

In closing

I know that the common CQRS talking points tell you to keep your queries simple, but that doesn’t mean that simple is easy.

It takes a fair bit of domain understanding to figure out what the queries in the system are supposed to be – what tasks users are trying to achieve through these queries. And even when you do reach this understanding, convincing various business stakeholders to change the design of the UI to reflect these insights is far from easy.

It often seems like the reasonable solution to give our users everything, to not limit them in any way, and then they’ll be able to do anything. What ends up happening is that our users end up drowning in a sea of data, unable to see the forest for the trees, ultimately resulting in the company not noticing important trends quickly enough (or at all) and therefore making poor business decisions.

Even if your company doesn’t believe itself to be in “Big Data” territory, I’d suggest talking with the people on the “front lines” just in case. Many of them will report feeling overwhelmed by the quantity of stuff (to use the correct scientific term) they need to deal with.

It’s not about Lucene, Solr, OData, SSRS, or any other technology.

It’s on you. Go get ’em.

Posted in Architecture, Caching, CQRS, Data Access, NOSQL, Usability | 8 Comments »

Tuesday, August 28th, 2012

Occasionally I’ll get questions from people who have been going down the CQRS path about why I’m so against data duplication. Aren’t the performance benefits of a denormalized view model justified, they ask. This is even more pronounced in geographically distributed systems where the “round-trip” may involve going outside your datacenter over a relatively slow link to another site.

CQRS

As has been said several times before by many others, it’s not the denormalized view model that defines CQRS.

One of the things that sometimes surprises people after going through my course is that in most cases you don’t need a denormalized view model, or at least, not the kind you think. Yes, that’s right: MOST cases.

But I don’t want to get too deep into the CQRS thing in this post – that can wait.

SOA

The big thing I’m against is raw business data being duplicated between services.

Data that can be expected to be accessible in multiple services includes things like identifiers, status information, and date-times. These date-times are used to anchor the status changes in time so that our system will behave correctly even if data/messages are processed out of order. Not all status information necessarily needs to be anchored in time explicitly – sometimes this can be implicit to the context of a given flow through the system.

For example, the Amazon.com checkout workflow.

In that flow, if you provide a shipping address that is in the US, you are presented with one set of options for shipping speed, whereas an international address will lead you to a different set of options.

Assuming that the address information of the customer and the shipping speed options are in different services, we need to propagate the status InternationalAddress(true/false) between these services in that same flow. In this case, there isn’t a need to explicitly anchor that status in time.
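For the cases where a status does need an explicit anchor in time, a minimal Python sketch of out-of-order handling might look like the following. The `StatusChange` and `StatusStore` names and the "newest timestamp wins" rule are assumptions made for illustration; the post does not prescribe a particular mechanism.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class StatusChange:
    entity_id: str
    status: str
    occurred_at: datetime   # when the change happened at the source, not when it arrived


class StatusStore:
    def __init__(self):
        self._latest = {}   # entity_id -> most recent StatusChange seen

    def apply(self, change):
        """Keep the change only if it is newer than what we already hold."""
        current = self._latest.get(change.entity_id)
        if current is None or change.occurred_at > current.occurred_at:
            self._latest[change.entity_id] = change
            return True
        return False   # stale message that arrived late; ignore it

    def status_of(self, entity_id):
        change = self._latest.get(entity_id)
        return change.status if change else None
```

Because each status carries the time it occurred, a late-arriving "Accepted" message cannot overwrite a "Shipped" status that was already recorded.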

But what’s so bad about duplication of data between services?

The danger is that functionality ultimately follows raw business data.

You start with something small like having product prices in the catalog service, the order service, and the invoice service. Then, when you get requirements around supporting multiple currencies, you now need to implement that logic in multiple places, or create a shared library that all the services depend on.

These dependencies creep up on you slowly, tying your shoelaces together, gradually slowing down the pace of development, undermining the stability of your codebase where changes to one part of the system break other parts. It’s a slow death by a thousand cuts, and as a result nobody is exactly sure what big decision we made that caused everything to go so bad.

That’s the thing, it wasn’t viewed as a “big decision” but rather as just one “pragmatic choice” for that specific case. The first one excuses the second, which paves the way for the third, and from that point on, it’s a “pattern” – how we do things around here; the proverbial slippery slope.

So what’s with the word “Replication” in the title of this post?

While data duplication between services is very dangerous, replication of business data WITHIN a service is perfectly alright.

Let’s get back into multi-site scenarios, like a retail chain that has a headquarters (HQ) and many stores. Prices are pushed out from the HQ and orders are pushed back from the stores according to some schedule.

We know that we can’t guarantee a perfect connection between all stores and the HQ at all times, therefore we copy the prices published from the HQ and store them locally in the store. Also, since we want to perform top-level analytics on the orders made at the various stores, that would be best done by having all of those orders copied locally at the HQ as well.

We should not view this movement of data from one physical location to another as duplication, but rather as replication done for performance reasons. If there were some magical always-on zero-latency network that existed, we wouldn’t need to do any of this replication.

And that’s just the thing – logical boundaries should not be impacted by these types of physical infrastructure choices (generally speaking). Since services are aligned with logical boundaries, we should expect to see them cross physical boundaries – this includes SYSTEM boundaries (since a system is really nothing more than a unit of deployment).

I know that you might be reading that and thinking “What!?” but there isn’t enough time to get into this in any more depth here. You can read some of my previous posts on the topic of SOA for more info here.

Cross-site integration without replication

There are some domains where sensitive data cannot be allowed to “rest” just anywhere. Let’s look at a healthcare environment where we’re integrating data from multiple hospitals and care providers. While all of these partners are interested in working together to make sure that patients get the best care, which means that they need to share their data with each other, they don’t want any of THEIR data to remain at any partner sites afterwards (and are quite adamant about this).

In these cases, the decision was made that performance is less important than data ownership. Personally, I don’t agree with this mindset. The fact that data is “at rest” in a location as opposed to “in flight” does not change ownership. It could be stored in an encrypted manner so that only a certain application could use it, resulting in the same overall effect, but this is an argument that I’ve never won.

People (as physical beings) put a great deal of emphasis on the physical locations of things. It’s understandable but quite counterproductive when dealing with the more abstract domain of software.

In closing

By virtue of the fact that we don’t duplicate raw business data between services, that means that the regular data structures inside a service already look very different from what they would have looked like in a traditional layered architecture with an ORM-persisted entity model.

In fact, you probably wouldn’t see very many relationships between entities at all.

Going beyond that, you probably wouldn’t see the same entities you had before. An Order wouldn’t exist the way you expect; addresses (billing and shipping) would be stored (indexed by OrderID) in one service whereas the shipping speed (also indexed by OrderId) would be in another, and the prices may well be in yet another.

It is in this manner that data does not end up being duplicated between services, but rather is composed by many services whether that is in the UI of one system, the print-outs done by a second system, or in the integration with 3rd parties done by a third system.

If performance needs to be improved, look at having these services replicate their data from one physical system to another – in-memory caching is one way of doing this, denormalized view models might be thought of as another (until you realize there isn’t very much normalization within a service to begin with).
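As a rough illustration of composition by ID, here is a Python sketch in which three dictionaries stand in for three services' private stores. The store names, the sample data, and the `compose_order_view` function are hypothetical; in a real system each lookup would be a call into a separate service.

```python
# Each dict stands in for a separate service's private store, keyed by order ID.
ADDRESSES = {
    "order-42": {"shipping": "1 Main St, Springfield", "billing": "PO Box 7, Springfield"},
}
SHIPPING_SPEEDS = {"order-42": "two-day"}
PRICES = {"order-42": [("widget", "19.99"), ("gadget", "5.00")]}


def compose_order_view(order_id):
    """Assemble a display model from each service's fragment.

    No service stores a copy of another service's data; the only thing
    they share is the order ID.
    """
    return {
        "order_id": order_id,
        "addresses": ADDRESSES.get(order_id),
        "shipping_speed": SHIPPING_SPEEDS.get(order_id),
        "line_prices": PRICES.get(order_id, []),
    }
```

The "Order" exists only at the moment of composition, which is why no single service needs an Order entity with relationships to everything else.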

And a word from our sponsor 🙂

For those of you on “rewrite that big-ball-of-mud” projects looking to use these principles, I strongly suggest coming on one of my courses. The next one is in San Francisco and I’ve just opened up the registration for Miami.

For those of you on the other side of the Atlantic, the next courses will be in Stockholm in October and in London this December.

The schedule for next year is also coming together and it will include South Africa and Australia too.

Anyway, here’s what one attendee had to say after taking the course earlier this month:

I wanted to thank you for the excellent workshop in Toronto last week. I spent the better part of the weekend reflecting over what was presented, the insights we learned through the group exercises, and how my preconceptions of SOA have changed. By the end of the course, all the tidbits of (usually) rather ambiguous information that I’ve collected from various blogs, books, and other sources, finally coalesced into something more intelligible – one big A-HA moment if you will. Overall, I found the content of the workshop to be incredibly enlightening and it left me feeling invigorated and excited to learn more.

– Joel from Canada

Hope you’ll be able to make it.

If travel is out of the question for you, you can also look at getting a recording of the course here.

One final thing

If your employer won’t foot the bill for these, please get in touch with me.

I wouldn’t want you not to be able to come just because you’re paying out of pocket.

There are very substantial discounts available.

Contact me.

Posted in Architecture, Autonomous Services, Caching, CQRS, SOA, Training | 32 Comments »

Sunday, November 1st, 2009

One question that I get asked about quite a bit with relation to messaging is about search. Isn’t search inherently request/response? Doesn’t it have to return immediately? Wouldn’t messaging in this case hurt our performance?

While I tend to put search in the query camp when keeping the responsibility of commands and queries separate, and often recommend that those queries be done without messaging, there are certain types of search where messaging does make sense.

In this post, I’ll describe certain properties of the problem domain that make messaging a good candidate for a solution.

Searching is beside the point – Finding is what it’s all about

Remember that search is only a means to an end in the eyes of the user – they want to find something. One of the difficulties we users have is expressing what we want to find in ways that machines can understand.

In thinking about how we build systems to interact with users, we need to take this fuzziness into account. The more data that we have, the less homogeneous it is, the harder this problem becomes.

When talking about speed, while users are sensitive to the technical interactivity, the thing that matters most is the total time it takes for them to find what they want. If the result of each search screen pops up in 100ms, but the user hasn’t found what they’re looking for after clicking through 20 screens, the search function is ultimately broken.

Notice that the finding process isn’t perceived as “immediate” in the eyes of the user – the evaluation they do in their heads of the search results is as much a part of finding as the search itself.

Also, if the user needs to refine their search terms in order to find what they want, we’re now talking about a multi-request/multi-response process. There is nothing in the problem domain which indicates that finding is inherently request/response.

Relationships in the data

When bringing back data as the result of a search, what we’re saying is that there is a property which is the same across the result elements. But there may be more than one such property. For example, if we search for “blue” on Google Images, we get back pictures of the sky, birds, flowers, and more. Obvious so far – but let’s exploit the obvious a bit.

When the user sees that too many irrelevant results come back, they’ll want to refine their search. One way they can do that is to perform a new search and put in a more specific search phrase – like “blue sky”. Another way is for them to indicate this by selecting an image and saying “not like this” or “more of these”. Then we can use the additional properties we know about those images to further refine the result group – either adding more images of one kind, or removing images of another.

Here’s something else that’s obvious:

Users often click or change their search before the entire result screen is shown.

It’s beginning to sound like users are already interacting with search in an asynchronous manner. What if we actually designed a system that played to that kind of interaction model?

Data-space partitioning

Once we accept the fact that the user is willing to have more results appear in increments, we can talk about having multiple servers processing the search in parallel. For large data spaces, it is unlikely for us to be able to store all the required meta data for search on one server anyway.

All we really need is a way to index these independent result-sets so that the user can access them. This can be done simply by allocating a GUID/UUID for the search request and storing the result-sets along with that ID.

Browser interaction

When the browser calls a server with the search request the first time, that server allocates an ID to that request, returns a URL containing that ID to the browser, and publishes an event containing the search term and the ID. Each of our processing nodes is subscribed to that event, performs the search on its part of the data-space, and writes its results (likely to a distributed cache) along with that ID.

The browser polls the above URL, which queries the cache (give me everything with this ID), and the browser sees which resources have been added since the last time it polled, and shows them to the user.

If the user clicks “more of these”, that initiates a new search request to the server, which follows the same pattern as before, just that the system is able to pull more relevant information. When implementing “not like this”, this performs a similar search but, instead of adding to the list of items shown, we’re removing items from the list shown based on the response from the server.

In this kind of user-system interaction model, having the user page through the result set doesn’t make very much sense as we’re not capturing the intent of the user, which is “you’re not showing me what I want”. By making it easy for the user to fine tune the result set, we get them closer to finding what they want. By performing work in parallel in a non-blocking manner on smaller sets of data, we greatly decrease the “time to first byte” as well as the time when the user can refine their search.
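The allocate-an-ID, publish, and poll flow described in this section can be sketched in a few lines of Python. Everything here is an in-process stand-in: `RESULT_CACHE` plays the distributed cache, `PARTITIONS` plays the processing nodes' slices of the data space, and the function names and URL shape are assumptions for illustration. A real system would publish the event over a message bus rather than call the nodes directly.

```python
import uuid
from collections import defaultdict

# Stand-in for a distributed cache: search ID -> results written so far.
RESULT_CACHE = defaultdict(list)

# Stand-in for the partitioned data space: each node holds one slice.
PARTITIONS = [
    ["blue sky", "red barn"],
    ["blue bird", "green field"],
    ["blue flower", "yellow sun"],
]


def start_search(term):
    """Allocate an ID, and return it with the URL to poll and the event to publish."""
    search_id = str(uuid.uuid4())
    event = {"search_id": search_id, "term": term}
    return search_id, f"/searches/{search_id}", event


def node_handle_search(partition, term, search_id):
    """What each subscribed node does when the search event arrives."""
    matches = [item for item in partition if term in item]
    RESULT_CACHE[search_id].extend(matches)


def poll(search_id, already_seen):
    """Return only the results added since the browser's last poll."""
    return RESULT_CACHE[search_id][already_seen:]
```

A browser-side loop would call `poll` with the number of results it has already shown, so results appear incrementally as each node finishes its part of the data space.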

But Google doesn’t work like that

I know that this isn’t like the search UI we’ve all grown used to.

But then again, the search that you’re providing your users is more specific – not just pages on the web. If you’re a retailer allowing your users to search for a gift, this kind of “more like this, less like that” model is how users would interact with a real sales-person when shopping in a store. Why not model your system after the ways that people behave in the real world?

In closing

If we were to try to make use of messaging underneath “classical” search interaction models, it probably wouldn’t have been the greatest fit. If all we’re doing at a logical level is blocking RPC, then messaging would probably make the system slower. The real power that you get from messaging is being able to technically do things in parallel – that’s how it makes things faster. If you can find ways to see that parallelism in your problem domain, not only will messaging make sense technically – it will really be the only way to build that kind of system.

Learning how to disconnect from seeing the world through the RPC-tinted glasses of our technical past takes time. Focusing on the problem domain, seeing it from the user’s perspective without any technical constraints – that’s the key to finding elegant solutions. More often than not, you’ll see that the real world is non-blocking and parallel, and then you’ll be able to make the best use of messaging and other related patterns.

What are your thoughts? Post a comment and let me know.

Posted in Architecture, Caching, EDA, ESB, Messaging, Usability | 8 Comments »

Friday, October 9th, 2009

I just finished listening to the Microsoft presentation on how they use the Concurrency & Coordination Runtime (CCR) in MySpace (the stated largest web site running .NET).

Some interesting numbers were stated in the talk.

- Tens of thousands to hundreds of thousands of requests per second

- Over 3 thousand web servers

- Over a thousand mid-tier servers

No wonder most big web sites don’t run .NET. The Windows licenses would put them out of business.

Well, that is if you follow those same architectural practices.

I’ve written in the past of alternative architectural approaches that can scale to those levels with easily an order of magnitude less hardware (I think it’s closer to two orders of magnitude) – here’s one of them on the topic of weather:

Building Super-Scalable Web Systems with REST.

By the way, the client quoted in that post is now well above 60 million users with only small incremental increases in hardware. Oh, and they’re running everything on Windows and .NET. The question is not “can it scale”, but rather “how much will it cost to scale”.

Architecture pays itself back faster than ever in the Web 2.0 world.

Posted in Architecture, Caching, Performance, Scalability | 13 Comments »

Monday, September 7th, 2009

So, I’ve gotten back from a most enjoyable couple of days in Sweden where I gave two half-day tutorials, the first being the SOA and UI composition talk I gave at the European Virtual ALT.NET meeting (which you can find online here) and the other on DDD in enterprise apps (the first time I’ve done this talk).

I’ve gotten some questions about my DDD presentation there based on Aaron Jensen’s pictures:

Yes – I talk with my hands. All the time.

That slide is quite an important one – I talked about it for at least 2 hours.

Here it is again, this time in full:

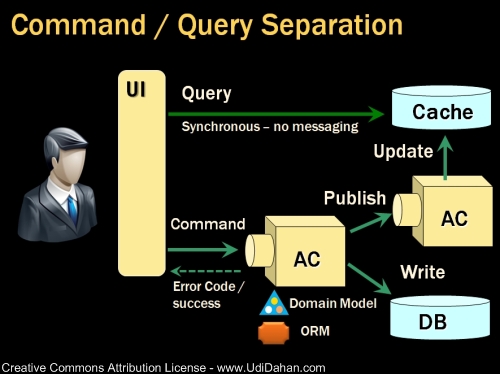

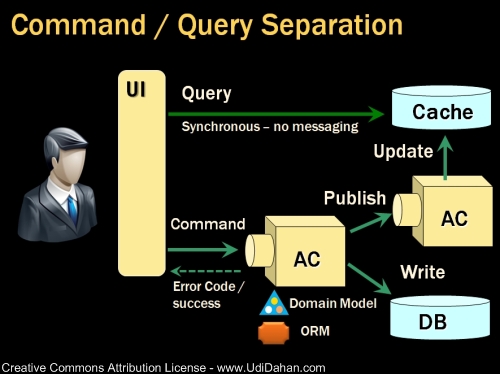

You may notice that the nice clean layered abstraction that the industry has gotten so comfortable with doesn’t quite sit right when looking at it from this perspective. The reason for that is that this perspective takes into account physical distribution while layers don’t.

I’ll have some more posts on this topic as well as giving a session in TechEd Europe this November.

Oh – and please do feel free to already send your questions in.

Posted in Architecture, Business Rules, Caching, Data Access, Databases, DDD, ESB, Messaging, NHibernate, Pub/Sub | 8 Comments »

Monday, December 29th, 2008

I’ve been consulting with a client who has a wildly successful web-based system, with well over 10 million users and looking at a tenfold growth in the near future. One of the recent features in their system was to show users their local weather and it almost maxed out their capacity. That raised certain warning flags as to the ability of their current architecture to scale to the levels that the business was taking them.

On Web 2.0 Mashups

One would think that sites like Weather.com and friends would be the first choice for implementing such a feature. Only thing is that they were strongly against being mashed-up Web 2.0 style on the client – they had enough scalability problems of their own. Interestingly enough (or not), these partners were quite happy to publish their weather data to us and let us handle the whole scalability issue.

Implementation 1.0

The current implementation was fairly straightforward – the client issues a regular web service request to the GetWeather webmethod, the server uses the user’s IP address to find out their location, then uses that location to look up the weather in the database, and returns that to the user. Standard fare for most dynamic data and the way most everybody would tell you to do it.

Only thing is that it scales like a dog.

Add Some Caching

The first thing you do when you have scalability problems and the database is the bottleneck is to cache, well, that’s what everybody says (same everybody as above).

The thing is that holding all the weather of the entire globe in memory, well, takes a lot of memory. More than is reasonable. In which case, there’s a fairly decent chance that a given request can’t be served from the cache, resulting in a query to the database, an update to the cache, which bumps out something else, in short, not a very good hit rate.

Not much bang for the buck.
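A small simulation shows how badly a cache can behave when the working set is larger than its capacity. This is a sketch using a least-recently-used (LRU) eviction policy, which is an assumption; the post does not say which policy the client's cache used, and real request patterns are not the neat cycle used here.

```python
from collections import OrderedDict


def lru_hit_rate(requests, capacity):
    """Replay a request sequence through an LRU cache and return the fraction of hits."""
    cache = OrderedDict()
    hits = 0
    for key in requests:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # mark as most recently used
        else:
            cache[key] = True               # simulate loading from the database
            if len(cache) > capacity:
                cache.popitem(last=False)   # evict the least recently used entry
    return hits / len(requests) if requests else 0.0


# 1,000 locations requested in rotation against a cache that holds 100 of them:
# every entry is evicted before it is asked for again.
many_locations = list(range(1000)) * 3
print(lru_hit_rate(many_locations, capacity=100))

# 50 locations against the same cache: only the first pass misses.
few_locations = list(range(50)) * 3
print(lru_hit_rate(few_locations, capacity=100))
```

In the first case the cache never serves a single request, so every call still reaches the database and the memory spent on caching buys nothing.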

If you have a single datacenter, having a caching tier that stores this data is possible, but costly. If you want a highly available, business continuity supportable, multi-datacenter infrastructure, the costs add up quite a bit quicker – to the point of not being cost effective (“You need HOW much money for weather?! We’ve got dozens more features like that in the pipe!”)

What we can do is to tell the client we’re responding to that they can cache the result, but that isn’t close to being enough for us to scale.

Look at the Data, Leverage the Internet

When you find yourself in this sort of situation, there’s really only one thing to do:

In order to save on bandwidth, the most precious commodity of the internet, the various ISPs and backbone providers cache aggressively. In fact, HTTP is designed exactly for that.

If user A asks for some html page, the various intermediaries between his browser and the server hosting that page will cache that page (based on HTTP headers). When user B asks for that same page, and their request goes through one of the intermediaries that user A’s request went through, that intermediary will serve back its cached copy of the page rather than calling the hosting server.

Also, users located in the same geographic region by and large go through the same intermediaries when calling a remote site.

Leverage the Internet

The internet is the biggest, most scalable data serving infrastructure that mankind was lucky enough to have happen to it. However, in order to leverage it – you need to understand your data and how your users use it, and finally align yourself with the way the internet works.

Let’s say we have 1,000 users in London. All of them are going to have the same weather. If all these users come to our site in the period of a few hours and ask for the weather, they all are going to get the exact same data. The thing is that the response semantics of the GetWeather webmethod must prevent intermediaries from caching so that users in Dublin and Glasgow don’t get London weather (although at times I bet they’d like to).

REST Helps You Leverage the Internet

Rather than thinking of getting the weather as an operation/webmethod, we can represent the various locations’ weather data as explicit web resources, each with its own URI. Thus, the weather in London would be http://weather.myclient.com/UK/London.

If we were able to make our clients in London perform an HTTP GET on http://weather.myclient.com/UK/London then we could return headers in the HTTP response telling the intermediaries that they can cache the response for an hour, or however long we want.

That way, after the first user in London gets the weather from our servers, all the other 999 users will be getting the same data served to them from one of the intermediaries. Instead of getting hammered by millions of requests a day, the internet would shoulder easily 90% of that load making it much easier to scale. Thanks Al.
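As a sketch of what "headers telling the intermediaries that they can cache" might look like, the Python below builds a per-location URI and a set of response headers. The function names and the JSON content type are assumptions for illustration; `Cache-Control: public, max-age=...` is the standard HTTP directive that permits shared caches to reuse a response for the given number of seconds.

```python
def weather_uri(country, city):
    """Map a location to its own resource URI, following the URL pattern above."""
    return f"http://weather.myclient.com/{country}/{city}"


def weather_response_headers(max_age_seconds=3600):
    """Headers telling shared caches (proxies, ISPs) they may reuse the response."""
    return {
        "Content-Type": "application/json",   # assumed representation format
        "Cache-Control": f"public, max-age={max_age_seconds}",
    }


print(weather_uri("UK", "London"))
print(weather_response_headers()["Cache-Control"])
```

Because the location is part of the URI rather than derived from the caller's IP address on the server, an intermediary can safely serve the London response to every client that asks for that URI.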

This isn’t a “cheap trick”. While being straight forward for something like weather, understanding the nature of your data and intelligently mapping that to a URI space is critical to building a scalable system, and reaping the benefits of REST.

What’s left?

The only thing that’s left is to get the client to know which URI to call. A simple matter, really.

When the user logs in, we perform the IP to location lookup and then write a cookie to the client with their location (UK/London). That cookie then stays with the user saving us from having to perform that IP to location lookup all the time. On subsequent logins, if the cookie is already there, we don’t do the lookup.

BTW, we also show the user “you’re in London, aren’t you?” with the link allowing the user to change their location, which we then update the cookie with and change the URI we get the weather from.

In Closing

While web services are great for getting a system up and running quickly and interoperably, scalability often suffers. Not so much as to be in your face, but after you’ve gone quite a ways and invested a fair amount of development in it, you find it standing between you and the scalability you seek.

Moving to REST is not about turning on the “make it restful” switch in your technology stack (ASP.NET MVC and WCF, I’m talking to you). Just like with databases there is no “make it go fast” switch – you really do need to understand your data, your users’ access patterns, and the volatility of the data so that you can map it to the “right” resources and URIs.

If you do walk the RESTful path, you’ll find that the scalability that was once so distant is now within your grasp.

Posted in Architecture, Caching, Performance, REST, Scalability, Web Services | 30 Comments »

Wednesday, October 22nd, 2008

Of the tenets of Service Orientation, the tenet of Autonomy is one that many understand intuitively. Interestingly enough, many in that same intuitive category don’t see pub/sub as a necessity for that autonomy.

Watch that first step

Although sometimes described as the first step of an organization moving to SOA, web-service-izing everything results in synchronous, blocking, request/response interaction between services. The problem is that if one service becomes unavailable, none of its consumers can perform any work. With the deep service “call stacks” this architectural style condones, the availability and performance of the entire organization will be dictated by the weakest link.

So, while I’d agree that many organizations do need to take this step, I’d caution against going into production at this step.

Pub/Sub Considered Helpful

When services interact with each other using publish/subscribe semantics we don’t have that technical problem of blocking. Subscribers cache the data published to them (either in memory or durably depending on their fault-tolerance requirements) thus enabling them to function and process requests even if the publisher is unavailable.

Consider the following scenario:

Let’s say we have an e-commerce site that is part of our Sales service, which is responsible for selling products. Another service, let’s call it Merchandising, is responsible for the catalog of products, and how much each product costs. Sales is subscribed to price update events published by Merchandising and saves (caches) those prices in its own database. When a customer orders some products on the site, Sales does not need to call Merchandising to get the price of the product and just uses the previously saved (cached) price. Thus, even if Merchandising is unavailable, Sales is able to accept orders. This is a big win as our merchandising application is not nearly as robust as our sales systems.

Yet, there are scenarios where data freshness requirements prevent this.

Too Much of a Good Thing?

Technically, the above story is accurate. There is nothing technically preventing Sales from accepting orders. Yet consider a scenario where Merchandising is down or unavailable for an extended period of time. While this may not be entirely likely for two servers in the same data center, consider physical kiosks which customers can use to buy products. Those kiosks may not receive updates for days. Should they accept orders?

That’s really a question to the business. If pricing data is stale for a time period greater than X, do not sell that item. The value of X may even be different for different kinds of products. Keep in mind that this issue only arose since we architected our services to be fully autonomous. In a synchronous systems architecture, this issue would not come up. As such, it is our responsibility as architects to go digging for these requirements as well as explaining to the business what the tradeoffs are.
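To make the “stale for longer than X” rule concrete, here is a minimal sketch of how Sales might keep cached prices together with a per-product staleness limit. All type and member names are hypothetical, and the sketch is not thread-safe; it only illustrates the check itself.

```csharp
using System;
using System.Collections.Generic;

public class CachedPrice
{
    public decimal Amount;
    public DateTime ReceivedAtUtc;   // when the price update event arrived
    public TimeSpan MaxStaleness;    // the business-defined "X" for this product
}

public class PriceCache
{
    private readonly Dictionary<string, CachedPrice> prices =
        new Dictionary<string, CachedPrice>();

    // Would be called by the handler of a price update event.
    public void Update(string productId, decimal amount,
                       DateTime receivedAtUtc, TimeSpan maxStaleness)
    {
        prices[productId] = new CachedPrice
        {
            Amount = amount,
            ReceivedAtUtc = receivedAtUtc,
            MaxStaleness = maxStaleness
        };
    }

    // True only if we hold a price and it is no older than the business allows.
    public bool CanSell(string productId, DateTime nowUtc)
    {
        CachedPrice price;
        if (!prices.TryGetValue(productId, out price))
            return false;
        return nowUtc - price.ReceivedAtUtc <= price.MaxStaleness;
    }
}
```

Keeping the limit next to each price is one way to let X differ between kinds of products; a lookup by product category would work just as well.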

In order to have more up to date data, we need to invest in more available hardware, networks, and infrastructure. This needs to be balanced against the predicted increase in revenue that more up to date (read higher) prices would give us.

You Can Get What You Pay For

Beyond the additional cost of writing that additional logic, and the perceived increased complexity, another difference to note between this architectural style and the synchronous/traditional one is that it puts control of spending back in the hands of business.

In a synchronous architecture, in order to achieve required performance and availability, all systems need to be performant, requiring across-the-board investments in servers, networks, and storage. Without investing everywhere, the weakest link is liable to undo all other investments. In other words, your developers have made your investment choices for you. Scary, isn’t it?

A more prudent investment strategy would prefer spending on services that give the biggest bang for the buck, better known as return on investment. A pub/sub based architecture allows investing in data-freshness where it makes the most sense. For example, in sales of high profit products to strategic customers rather than inventory management of raw materials for products slated to be decommissioned.

That sounds a lot like IT-Business Alignment.

Maybe there’s something to this SOA thing after all…

Read more about:

7 Questions for Service Selection

7 Questions around data freshness

Event-Driven Architecture and Legacy Applications

Autonomous Services and Enterprise Entity Aggregation

Or listen to a podcast describing Business Components, the connection of pub/sub and SOA.

Posted in Autonomous Services, Caching, EDA, ESB, Pub/Sub, SOA | 11 Comments »

Thursday, June 19th, 2008

For those people who couldn’t come to TechEd USA and didn’t see my talks on how to build highly scalable web architectures, you’re in luck – Craig, the man behind the Polymorphic Podcast, sat down with me and we chatted about the problems, common solutions, and effective tactics in this space. For those of you who were at TechEd and still didn’t come to my talk – what were you thinking?!

🙂

Check it out.

Some of this stuff is a bit counter-intuitive (and not readily supported by the tools available in Visual Studio) so please, do feel free to ask questions (in the comments below).

Posted in Architecture, Caching, Messaging, Pub/Sub, Scalability, Web Services | No Comments »

Saturday, November 10th, 2007

Often during my consulting engagements I run into people who say, "some things just can’t be made asynchronous" even after they agree about the inherent scalability that asynchronous communication patterns bring. One often-cited example is user authentication – taking a username and password combo and authenticating it against some back-end store. For the purpose of this post, I’m going to assume a database. Also, I’m not going to be showing more advanced features like ETags to further improve the solution.

The Setup

Just so that the example is in itself secure, we’ll assume that the password is one-way hashed before being stored. Also, given a reasonable network infrastructure our web servers will be isolated in the DMZ and will have to access some application server which, in turn, will communicate with the DB. There’s also a good chance for something like round-robin load-balancing between web servers, especially for things like user login.

Before diving into the meat of it, I wanted to preface with a few words. One of the commonalities I’ve found when people dismiss asynchrony is that they don’t consider a real deployment environment, or scaling up a solution to multiple servers, farms, or datacenters.

The Synchronous Solution

In the synchronous solution, each one of our web servers will be contacting the app server for each user login request. In other words, the load on the app server and, consequently, on the database server will be proportional to the number of logins. One property of this load is its data locality, or rather, the lack of it. Given that user U logged in, the DB won’t necessarily gain any performance benefits by loading all username/password data into memory for the same page as user U. Another property is that this data is very non-volatile – it doesn’t change that often.

I won’t go too far into the synchronous solution since it’s been analysed numerous times before. The bottom line is that the database is the bottleneck. You could use sharding solutions. Many of the large sites have numerous read-only databases for this kind of data, with one master for updates – replicating out to the read-only replicas. That’s great if you’re using a nice cheap database like MySQL (of LAMP), not so nice if you’re running Oracle or MS SQL Server.

Regardless of what you’re doing in your data tier, you’re there. Wouldn’t it be nice to close the loop in the web servers? Even if you are using Apache, that’s going to be less iron, electricity, and cooling all around. That’s what the asynchronous solution is all about – capitalizing on the low cost of memory to save on other things.

The Asynchronous Solution

In the asynchronous solution, we cache username/hashed-password pairs in memory on our web servers, and authenticate against that. Let’s analyse how much memory that takes:

Usernames are usually 12 characters or less, but let’s assume 32 to be safe. Using Unicode (two bytes per character) we get to 64 bytes for the username. Hashed passwords can run between 256 and 512 bits depending on the algorithm; divide by 8 and you have at most 64 bytes. That’s about 128 bytes altogether. So we can safely cache 8 million of these with 1GB of memory per web server. If you’ve got a million users, first of all, good for you 🙂 Second, that’s just 128 MB of memory – relatively nothing even for a cheap 2GB web server.
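The arithmetic above can be written out as a small sketch. The names are hypothetical, and the figures count only the raw payload; a real in-memory cache would also pay per-object and collection overhead, so treat the result as a lower bound on memory use.

```csharp
public static class CacheSizing
{
    public const int UsernameChars = 32;    // generous upper estimate from the text
    public const int BytesPerChar = 2;      // two-byte Unicode characters
    public const int HashBytes = 512 / 8;   // largest hash considered: 64 bytes

    // 32 * 2 + 64 = 128 bytes of payload per username/hashed-password pair.
    public const int BytesPerEntry = UsernameChars * BytesPerChar + HashBytes;

    // How many pairs fit in the given amount of memory.
    public static long EntriesThatFit(long memoryBytes)
    {
        return memoryBytes / BytesPerEntry;
    }

    // How much memory the given number of users needs.
    public static long BytesFor(long users)
    {
        return users * BytesPerEntry;
    }
}
```

`CacheSizing.EntriesThatFit(1L << 30)` gives 8,388,608 entries for 1GB, and `CacheSizing.BytesFor(1000000)` gives 128,000,000 bytes for a million users, matching the estimates in the text.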

Also, consider the fact that when registering a new user we can check if such a username is already taken at the web server level. That doesn’t mean it won’t be checked again in the DB to account for concurrency issues, but that the load on the DB is further reduced. Other things to notice include no read-only replicas and no replication. Simple. Our web servers are the "replicas".

The Authentication Service

What makes it all work is the "Authentication Service" on the app server. This was always there in the synchronous solution. It is what used to field all the login requests from the web servers, and, of course, allowed them to register new users and all the regular stuff. The difference is that now it publishes a message when a new user is registered (or rather, is validated – all a part of the internal long-running workflow). It also allows subscribers to receive the list of all username/hashed-password pairs. It’s also quite likely that it would keep the same data in memory too.

When using NServiceBus, the same message can be used both to publish single updates and to return the full list. Let’s define the message:

    [Serializable]
    public class UsernameInUseMessage : IMessage
    {
        private string username;
        public string Username
        {
            get { return username; }
            set { username = value; }
        }

        private byte[] hashedPassword;
        public byte[] HashedPassword
        {
            get { return hashedPassword; }
            set { hashedPassword = value; }
        }
    }

And the message that the web server sends when it wants the full list:

    [Serializable]
    public class GetAllUsernamesMessage : IMessage
    {
    }

And the code that the web server runs on startup looks like this (assuming constructor injection):

    public class UserAuthenticationServiceAgent
    {
        private readonly IBus bus;

        public UserAuthenticationServiceAgent(IBus bus)
        {
            this.bus = bus;

            // Receive every future update, then ask for the current full list.
            bus.Subscribe(typeof(UsernameInUseMessage));
            bus.Send(new GetAllUsernamesMessage());
        }
    }

And the code that runs in the Authentication Service when the GetAllUsernamesMessage is received:

    public class GetAllUsernamesMessageHandler : BaseMessageHandler<GetAllUsernamesMessage>
    {
        public override void Handle(GetAllUsernamesMessage message)
        {
            this.Bus.Reply(Cache.GetAll<UsernameInUseMessage>());
        }
    }

And the class on the web server that handles a UsernameInUseMessage when it arrives:

    public class UsernameInUseMessageHandler : BaseMessageHandler<UsernameInUseMessage>
    {
        public override void Handle(UsernameInUseMessage message)
        {
            WebCache.SaveOrUpdate(message.Username, message.HashedPassword);
        }
    }

When the app server sends the full list, multiple objects of the type UsernameInUseMessage are sent in one physical message to that web server. However, the bus object that runs on the web server dispatches each of these logical messages one at a time to the message handler above.

So, when it comes time to actually authenticate a user, this is what the web page (or controller, if you’re doing MVC) would call:

    public class UserAuthenticationServiceAgent
    {
        public bool Authenticate(string username, string password)
        {
            byte[] existingHashedPassword = WebCache[username];

            // Compare the bytes themselves; == on arrays only compares references.
            // SequenceEqual requires: using System.Linq;
            if (existingHashedPassword != null)
                return existingHashedPassword.SequenceEqual(this.Hash(password));

            return false;
        }
    }

When registering a new user, the web server would of course first check its cache, and then send a RegisterUserMessage that contained the username and the hashed password.

    [Serializable]
    [StartsWorkflow]
    public class RegisterUserMessage : IMessage
    {
        private string username;
        public string Username
        {
            get { return username; }
            set { username = value; }
        }

        private string email;
        public string Email
        {
            get { return email; }
            set { email = value; }
        }

        private byte[] hashedPassword;
        public byte[] HashedPassword
        {
            get { return hashedPassword; }
            set { hashedPassword = value; }
        }
    }

When the RegisterUserMessage arrives at the app server, a new long-running workflow is kicked off to handle the process:

    public class RegisterUserWorkflow :
        BaseWorkflow<RegisterUserMessage>, IMessageHandler<UserValidatedMessage>
    {
        public void Handle(RegisterUserMessage message)
        {
            // send validation request to message.Email containing this.Id (a guid)
            // as a part of the URL
        }

        /// <summary>
        /// When a user clicks the validation link in the email, the web server
        /// sends this message (containing the workflow Id)
        /// </summary>
        /// <param name="message"></param>
        public void Handle(UserValidatedMessage message)
        {
            // write user to the DB

            // UsernameInUseMessage only has a default constructor,
            // so set its properties with an object initializer.
            this.Bus.Publish(new UsernameInUseMessage
            {
                Username = message.Username,
                HashedPassword = message.HashedPassword
            });
        }
    }

That UsernameInUseMessage would eventually arrive at all the web servers subscribed.

Performance/Security Trade-Offs

When looking deeper into this workflow we realize that it could be implemented as two separate message handlers, and have the email address take the place of the workflow Id. The problem with this alternate, better performing solution has to do with security. By removing the dependence on the workflow Id, we’ve in essence stated that we’re willing to receive a UserValidatedMessage without having previously received the RegisterUserMessage.

Since the processing of the UserValidatedMessage is relatively expensive – writing to the DB and publishing messages to all web servers, a malicious user could perform a denial of service (DOS) attack without that many messages, thus flying under the radar of many detection systems. Spoofing a guid that would result in a valid workflow instance is much more difficult. Also, since workflow instances would probably be stored in some in-memory, replicated data grid the relative cost of a lookup would be quite small – small enough to avoid a DOS until a detection system picked it up.

Improved Bandwidth & Latency

The bottom line is that you’re getting much more out of your web tier this way, rather than hammering your data tier and having to scale it out much sooner. Also, notice that there is much less network traffic this way. Not such a big deal for usernames and passwords, but other scenarios built in the same way may need more data. Of course, the time it takes us to log a user in is much shorter as well since we don’t have to cross back and forth from the web server (in the DMZ) to the app server, to the db server.

The important thing to remember in this solution is doing pub/sub. NServiceBus merely provides a simple API for designing the system around pub/sub. And publishing is where you get the serious scalability. As you get more users, you’ll obviously need to get more web servers. The thing is that you probably won’t need more database servers just to handle logins. In this case, you also get lower latency per request since all work needed to be done can be done locally on the server that received the request.

ETags make it even better

For the more advanced crowd, I’ll wrap it up with the ETags. Since web servers do go down, and the cache will be cleared, what we can do is to write that cache to disk (probably in a background thread), and "tag" it with something that the server gave us along with the last UsernameInUseMessage we received. That way, when the web server comes back up, it can send that ETag along with its GetAllUsernamesMessage so that the app server will only send the changes that occurred since. This drives down network usage even more at the insignificant cost of some disk space on the web servers.
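One way the app server side of this could look is a change log whose entries carry an increasing version number, with that number serving as the tag. This is only a sketch under that assumption; the types below are hypothetical and not part of NServiceBus.

```csharp
using System;
using System.Collections.Generic;

public class UsernameChange
{
    public long Version;            // doubles as the "tag" handed to web servers
    public string Username;
    public byte[] HashedPassword;
}

public class UsernameChangeLog
{
    private readonly object sync = new object();
    private readonly List<UsernameChange> changes = new List<UsernameChange>();

    // Records a change and returns the tag that now represents "up to date".
    public long Append(string username, byte[] hashedPassword)
    {
        lock (sync)
        {
            long version = changes.Count + 1;
            changes.Add(new UsernameChange
            {
                Version = version,
                Username = username,
                HashedPassword = hashedPassword
            });
            return version;
        }
    }

    // Returns only what happened after the tag the web server last saw.
    // A tag of 0 (nothing on disk) yields the full list.
    public List<UsernameChange> ChangesSince(long lastSeenTag)
    {
        lock (sync)
        {
            var result = new List<UsernameChange>();
            for (long i = Math.Max(0L, lastSeenTag); i < changes.Count; i++)
                result.Add(changes[(int)i]);
            return result;
        }
    }
}
```

A web server that persisted tag 2 before going down would get back only the third and later entries on restart, instead of the whole list.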

And in closing…

Even if you don’t have anything more than a single physical server today, and it acts as your web server and database server, this solution won’t slow things down. If anything, it’ll speed it up. Regardless, you’re much better prepared to scale out than before – no need to rip and replace your entire architecture just as you get 8 million Facebook users banging down your front door.

So, go check out NServiceBus and get the most out of your iron.

Posted in Architecture, Autonomous Services, Availability, Caching, Data Access, Databases, Development, ESB, NServiceBus, Performance, Pub/Sub, Scalability, Security, SOA, Web Services, Workflow | 13 Comments »

Thursday, October 4th, 2007

On one of the projects I’m consulting on they needed some special behavior to handle the following scenario:

Since the service needs to perform all request processing in near-real-time, it caches all data from the DB in memory (yes, that’s a lot of memory). Since the service needs to handle multiple requests concurrently, we’re using multiple threads (so far, so good). The problem is that we don’t want the service to handle messages received until it’s finished caching everything. Also, we don’t want that check to show up in every message handler (important when you have lots of message types).

This is actually quite easy to do with NServiceBus. Here’s how:

Have a thread-safe class, let’s call it Loader, for the API to the caching. Something along the lines of:

    if (!Loader.HasCachedEverything)
        Loader.CacheEverything();

Obviously, the Loader will have internal logic for checking if it has already started loading things from the DB, so that it won’t do the same thing twice.
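A minimal sketch of what such a Loader might look like follows. Only the names `Loader`, `HasCachedEverything` and `CacheEverything` come from the snippet above; the `LoadFromDatabase` hook and the choice to load on a thread-pool thread are assumptions made for illustration.

```csharp
using System;
using System.Threading;

public static class Loader
{
    private static int started;             // 0 = not started, 1 = started
    private static volatile bool finished;  // set once the load has completed

    // Hypothetical hook standing in for the real "read everything from the DB" code.
    public static Action LoadFromDatabase = delegate { };

    public static bool HasCachedEverything
    {
        get { return finished; }
    }

    // Safe to call from many threads: only the first caller starts the load.
    public static void CacheEverything()
    {
        // Atomically flip 0 -> 1; every later caller sees 1 and returns at once.
        if (Interlocked.CompareExchange(ref started, 1, 0) != 0)
            return;

        ThreadPool.QueueUserWorkItem(delegate
        {
            LoadFromDatabase();
            finished = true;
        });
    }
}
```

Note that this sketch never retries: if the load throws, `HasCachedEverything` stays false, so a real implementation would need some error handling around the load.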

OK, now on to the interesting stuff.

We’d like to have the above code run no matter which kind of message we’ve received, so we just write a “generic” message handler – which handles “IMessage” like so:

    public class CachingMessageHandler : BaseMessageHandler<IMessage>
    {
        public override void Handle(IMessage message)
        {
            if (!Loader.HasCachedEverything)
            {
                Loader.CacheEverything();

                // Put the message back in the queue and stop further handlers.
                this.Bus.HandleCurrentMessageLater();
                this.CanContinue = false;
            }
        }
    }

When the message handler calls “HandleCurrentMessageLater”, the bus puts the current message in the back of the queue. If you’ve configured a transactional transport, this will be safe even in the case of a server restart.

Also, notice the “CanContinue = false”. This tells the bus that the message should not be passed on to any other message handlers, even if there are those that are configured to handle it.

We’ll also package this class up by itself, keeping it separate from the core logic of the service – making it easier to version these cross cutting concerns and the service logic. Let’s put it in “CrossCuttingConcerns.dll”

The final thing needed in order to achieve the behavior described above is to configure this message handler to run before any other handler. This is done in the config file of the process, under the “bus” object, in the “MessageHandlerAssemblies” property like so:

    <property name="MessageHandlerAssemblies">
        <list>
            <value>CrossCuttingConcerns</value>
            <value>ServiceLogic</value>
        </list>
    </property>

This is similar to the way HttpHandlers are (were?) configured in IIS – the order of the handlers defines the order in which the bus dispatches messages to them.

And that’s it.

We’re done.

If you have any questions you’d like to ask about NServiceBus, please feel free to send them my way: Questions@NServiceBus.com.

And just in closing I’d like to say that I don’t necessarily think you should be creating stateful services, but that there’s a time and place for everything.

Posted in Architecture, Autonomous Services, Caching, EDA, ESB, NServiceBus, SOA | No Comments »


Recommendations

Bryan Wheeler, Director Platform Development at msnbc.com

“ Udi Dahan is the real deal.We brought him on site to give our development staff the 5-day “Advanced Distributed System Design” training. The course profoundly changed our understanding and approach to SOA and distributed systems. Consider some of the evidence: 1. Months later, developers still make allusions to concepts learned in the course nearly every day 2. One of our developers went home and made her husband (a developer at another company) sign up for the course at a subsequent date/venue 3. Based on what we learned, we’ve made constant improvements to our architecture that have helped us to adapt to our ever changing business domain at scale and speed If you have the opportunity to receive the training, you will make a substantial paradigm shift. If I were to do the whole thing over again, I’d start the week by playing the clip from the Matrix where Morpheus offers Neo the choice between the red and blue pills. Once you make the intellectual leap, you’ll never look at distributed systems the same way. Beyond the training, we were able to spend some time with Udi discussing issues unique to our business domain. Because Udi is a rare combination of a big picture thinker and a low level doer, he can quickly hone in on various issues and quickly make good (if not startling) recommendations to help solve tough technical issues.” November 11, 2010

Sam Gentile, Independent WCF & SOA Expert

Sam Gentile, Independent WCF & SOA Expert

“Udi, one of the great minds in this area. A man I respect immensely.”

Ian Robinson, Principal Consultant at ThoughtWorks

Ian Robinson, Principal Consultant at ThoughtWorks

"Your blog and articles have been enormously useful in shaping, testing and refining my own approach to delivering on SOA initiatives over the last few years. Over and against a certain 3-layer-application-architecture-blown-out-to- distributed-proportions school of SOA, your writing, steers a far more valuable course."

Shy Cohen, Senior Program Manager at Microsoft

Shy Cohen, Senior Program Manager at Microsoft

“Udi is a world renowned software architect and speaker. I met Udi at a conference that we were both speaking at, and immediately recognized his keen insight and razor-sharp intellect. Our shared passion for SOA and the advancement of its practice launched a discussion that lasted into the small hours of the night. It was evident through that discussion that Udi is one of the most knowledgeable people in the SOA space. It was also clear why – Udi does not settle for mediocrity, and seeks to fully understand (or define) the logic and principles behind things. Humble yet uncompromising, Udi is a pleasure to interact with.”

Glenn Block, Senior Program Manager - WCF at Microsoft

Glenn Block, Senior Program Manager - WCF at Microsoft

“I have known Udi for many years having attended his workshops and having several personal interactions including working with him when we were building our Composite Application Guidance in patterns & practices. What impresses me about Udi is his deep insight into how to address business problems through sound architecture. Backed by many years of building mission critical real world distributed systems it is no wonder that Udi is the best at what he does. When customers have deep issues with their system design, I point them Udi's way.”

Karl Wannenmacher, Senior Lead Expert at Frequentis AG

Karl Wannenmacher, Senior Lead Expert at Frequentis AG

“I have been following Udi’s blog and podcasts since 2007. I’m convinced that he is one of the most knowledgeable and experienced people in the field of SOA, EDA and large scale systems.

Udi helped Frequentis to design a major subsystem of a large mission critical system with a nationwide deployment based on NServiceBus. It was impressive to see how he took the initial architecture and turned it upside down leading to a very flexible and scalable yet simple system without knowing the details of the business domain.

I highly recommend consulting with Udi when it comes to large scale mission critical systems in any domain.”

Simon Segal, Independent Consultant

Simon Segal, Independent Consultant

“Udi is one of the outstanding software development minds in the world today, his vast insights into Service Oriented Architectures and Smart Clients in particular are indeed a rare commodity. Udi is also an exceptional teacher and can help lead teams to fall into the pit of success. I would recommend Udi to anyone considering some Architecural guidance and support in their next project.”

Ohad Israeli, Chief Architect at Hewlett-Packard, Indigo Division

Ohad Israeli, Chief Architect at Hewlett-Packard, Indigo Division

“When you need a man to do the job Udi is your man! No matter if you are facing near deadline deadlock or at the early stages of your development, if you have a problem Udi is the one who will probably be able to solve it, with his large experience at the industry and his widely horizons of thinking , he is always full of just in place great architectural ideas.

I am honored to have Udi as a colleague and a friend (plus having his cell phone on my speed dial).”

Ward Bell, VP Product Development at IdeaBlade

Ward Bell, VP Product Development at IdeaBlade

“Everyone will tell you how smart and knowledgable Udi is ... and they are oh-so-right. Let me add that Udi is a smart LISTENER. He's always calibrating what he has to offer with your needs and your experience ... looking for the fit. He has strongly held views ... and the ability to temper them with the nuances of the situation. I trust Udi to tell me what I need to hear, even if I don't want to hear it, ... in a way that I can hear it. That's a rare skill to go along with his command and intelligence.”

Eli Brin, Program Manager at RISCO Group

“We hired Udi as a SOA specialist for a large scale project. The development is outsourced to India. SOA is a buzzword used almost for anything today. We wanted to understand what SOA really is, and what is the meaning and practice to develop a SOA based system.

We identified Udi as the one that can put some sense and order in our minds. We started with a private customized SOA training for the entire team in Israel. After that I had several focused sessions regarding our architecture and design.

I will summarize it simply (as he is the software simplist): We are very happy to have Udi in our project. It has a great benefit. We feel good and assured with the knowledge and practice he brings. He doesn’t talk over our heads. We assimilated nServicebus as the ESB of the project. I highly recommend you to bring Udi into your project.”

Catherine Hole, Senior Project Manager at the Norwegian Health Network

Catherine Hole, Senior Project Manager at the Norwegian Health Network

“My colleagues and I have spent five interesting days with Udi - diving into the many aspects of SOA. Udi has shown impressive abilities of understanding organizational challenges, and has brought the business perspective into our way of looking at services. He has an excellent understanding of the many layers from business at the top to the technical infrstructure at the bottom. He is a great listener, and manages to simplify challenges in a way that is understandable both for developers and CEOs, and all the specialists in between.”

Yoel Arnon, MSMQ Expert

Yoel Arnon, MSMQ Expert

“Udi has a unique, in depth understanding of service oriented architecture and how it should be used in the real world, combined with excellent presentation skills. I think Udi should be a premier choice for a consultant or architect of distributed systems.”

Vadim Mesonzhnik, Development Project Lead at Polycom

“When we were faced with a task of creating a high performance server for a video-tele conferencing domain we decided to opt for a stateless cluster with SQL server approach. In order to confirm our decision we invited Udi.

After carefully listening for 2 hours he said: "With your kind of high availability and performance requirements you don’t want to go with stateless architecture."

One simple sentence saved us from implementing a wrong product and finding that out after years of development. No matter whether our former decisions were confirmed or altered, it gave us great confidence to move forward relying on the experience, industry best-practices and time-proven techniques that Udi shared with us.

It was a distinct pleasure and a unique opportunity to learn from someone who is among the best at what he does.”

Jack Van Hoof, Enterprise Integration Architect at Dutch Railways

Jack Van Hoof, Enterprise Integration Architect at Dutch Railways

“Udi is a respected visionary on SOA and EDA, whose opinion I most of the time (if not always) highly agree with. The nice thing about Udi is that he is able to explain architectural concepts in terms of practical code-level examples.”

Neil Robbins, Applications Architect at Brit Insurance

Neil Robbins, Applications Architect at Brit Insurance

“Having followed Udi's blog and other writings for a number of years I attended Udi's two day course on 'Loosely Coupled Messaging with NServiceBus' at SkillsMatter, London.

I would strongly recommend this course to anyone with an interest in how to develop IT systems which provide immediate and future fitness for purpose. An influential and innovative thought leader and practitioner in his field, Udi demonstrates and shares a phenomenally in depth knowledge that proves his position as one of the premier experts in his field globally.

The course has enhanced my knowledge and skills in ways that I am able to immediately apply to provide benefits to my employer. Additionally though I will be able to build upon what I learned in my 2 days with Udi and have no doubt that it will only enhance my future career.

I cannot recommend Udi, and his courses, highly enough.”

Nick Malik, Enterprise Architect at Microsoft Corporation

Nick Malik, Enterprise Architect at Microsoft Corporation

“ You are an excellent speaker and trainer, Udi, and I've had the fortunate experience of having attended one of your presentations. I believe that you are a knowledgable and intelligent man.”

Sean Farmar, Chief Technical Architect at Candidate Manager Ltd

Sean Farmar, Chief Technical Architect at Candidate Manager Ltd

“Udi has provided us with guidance in system architecture and supports our implementation of NServiceBus in our core business application.

He accompanied us in all stages of our development cycle and helped us put vision into real life distributed scalable software. He brought fresh thinking, great in depth of understanding software, and ongoing support that proved as valuable and cost effective.

Udi has the unique ability to analyze the business problem and come up with a simple and elegant solution for the code and the business alike. With Udi's attention to detail and his knowledge, we avoided pitfalls that would have cost us dearly.”

Børge Hansen, Architect Advisor at Microsoft

“Udi delivered a 5 hour long workshop on SOA for aspiring architects in Norway. While keeping everyone awake and excited Udi gave us some great insights and really delivered on making complex software challenges simple. Truly the software simplist.”

Motty Cohen, SW Manager at KorenTec Technologies

“I know Udi very well from our mutual work at KorenTec. During the analysis and design of a complex, distributed C4I system - where the basic concepts of NServiceBus started to emerge - I gained a lot of "Udi's hours" so I can surely say that he is a professional, skilled architect with fresh ideas and a unique perspective for solving complex architecture challenges. His ideas, concepts and parts of the artifacts are the basis of several state-of-the-art C4I systems whose architecture design I was involved in.”

Aaron Jensen, VP of Engineering at Eleutian Technology

“Awesome. Just awesome.

We’d been meaning to delve into messaging at Eleutian after multiple discussions with and blog posts from Greg Young and Udi Dahan in the past. We weren’t entirely sure where to start, how to start, what tools to use, how to use them, etc. Being able to sit in a room with Udi for an entire week while he described exactly how, why and what he does to tackle a massive enterprise system was invaluable to say the least.

We now have a much better direction and, more importantly, have the confidence we need to start introducing these powerful concepts into production at Eleutian.”

Gad Rosenthal, Department Manager at Retalix

“A thinking person. Brought fresh and valuable ideas that helped us in architecting our product. When recommending a solution he supports it with evidence and detail so you can successfully act based on it. Udi's support "comes on all levels" - As the solution architect through to the detailed class design. Trustworthy!”

Chris Bilson, Developer at Russell Investment Group

“I had the pleasure of attending a workshop Udi led at the Seattle ALT.NET conference in February 2009. I have been reading Udi's articles and listening to his podcasts for a long time and have always looked to him as a source of advice on software architecture. When I actually met him and talked to him I was even more impressed. Not only is Udi an extremely likable person, he's got that rare gift of being able to explain complex concepts and ideas in a way that is easy to understand. All the attendees of the workshop greatly appreciate the time he spent with us and the amazing insights into service oriented architecture he shared with us.”

Alexey Shestialtynov, Senior .Net Developer at Candidate Manager

“I met Udi at Candidate Manager where he was brought in part-time as a consultant to help the company make its flagship product more scalable. For me, even after 30 years in software development, working with Udi was a great learning experience. I simply love his fresh ideas and architecture insights. As we all know, it is not enough to be armed with the best tools and technologies to be successful in software - there is still a human factor involved. When, as it happens, the project got in trouble, management asked Udi to step into a leadership role and bring it back on track. This he did in the span of a month. I can only wish that things had been done this way from the very beginning. I look forward to working with Udi again in the future.”

Christopher Bennage, President at Blue Spire Consulting, Inc.

“My company was hired to be the primary development team for a large scale and highly distributed application. Since these are not necessarily everyday requirements, we wanted to bring in some additional expertise. We chose Udi because of his blogging, podcasting, and speaking. We asked him to review our architectural strategy as well as the overall viability of the project.

I was very impressed, as Udi demonstrated a broad understanding of the sorts of problems we would face. His advice was honest and unbiased and very pragmatic. Whenever I questioned him on particular points, he was able to back up his opinion with real life examples.

I was also impressed with his clarity and precision. He was very careful to untangle the meaning of words that might be overloaded or otherwise confusing. While Udi's hourly rate may not be the cheapest, the ROI is undoubtedly a deal.

I would highly recommend consulting with Udi.”

Robert Lewkovich, Product / Development Manager at Eggs Overnight

“Udi's advice and consulting were a huge time saver for the project I'm responsible for. The $ spent were well worth it and provided me with a more complete understanding of nServiceBus and most importantly in helping make the correct architectural decisions earlier thereby reducing later, and more expensive, rework.”

Ray Houston, Director of Development at TOPAZ Technologies

“Udi's SOA class made me smart - it was awesome.

The class was very well put together. The materials were clear and concise and Udi did a fantastic job presenting it. It was a good mixture of lecture, coding, and question and answer. I fully expected that I would be taking notes like crazy, but it was so well laid out that the only thing I wrote down the entire course was what I wanted for lunch. Udi provided us with all the lecture materials and everyone has access to all of the samples which are in the nServiceBus trunk.

Now I know why Udi is the "Software Simplist." I was amazed to find that all the code and solutions were indeed very simple. The patterns that Udi presented keep things simple by isolating complexity so that it doesn't creep into your day to day code. The domain code looks the same if it's running in a single process or if it's running in 100 processes.”

Ian Cooper, Team Lead at Beazley

“Udi is one of the leaders in the .Net development community, one of the truly smart guys who do not just get best architectural practice well enough to educate others but drive innovation. Udi consistently challenges my thinking in ways that make me better at what I do.”

Liron Levy, Team Leader at Rafael

“I met Udi when I worked as a team leader in Rafael. One of the most senior managers there knew Udi because he was doing a superb architecture job in another Rafael project and he recommended bringing him on board to help the project I was leading. Udi brought with him fresh solutions and invaluable deep architecture insights. He is an authority on SOA (service oriented architecture) and this was a tremendous help in our project. On the personal level - Udi is a great communicator and can persuade even the most difficult audiences (I was part of such an audience myself..) by bringing sound explanations that draw on his extensive knowledge in the software business. Working with Udi was a great learning experience for me, and I'll be happy to work with him again in the future.”

Adam Dymitruk, Director of IT at Apara Systems

“I met Udi for the first time at DevTeach in Montreal back in early 2007. While Udi is usually involved in SOA subjects, his knowledge spans all of a software development company's concerns. I would not hesitate to recommend Udi for any company that needs excellent leadership, mentoring, problem solving, application of patterns, implementation of methodologies and straight out solution development. There are very few people in the world that are as dedicated to their craft as Udi is to his. At ALT.NET Seattle, Udi explained many core ideas about SOA. The team that I brought with me found his workshop and other talks the highlight of the event and provided the most value to us and our organization. I am thrilled to have the opportunity to recommend him.”

Eytan Michaeli, CTO Korentec

“Udi was responsible for a major project in the company, and as a chief architect designed a complex multi server C4I system with many innovations and excellent performance.”

Carl Kenne, .Net Consultant at Dotway AB

“Udi's session "DDD in Enterprise apps" was truly an eye opener. Udi has a great ability to explain complex enterprise designs in a very comprehensive and inspiring way. I've seen several sessions on both DDD and SOA in the past, but Udi puts it in a completely new perspective and makes us understand what it's all really about. If you ever have a chance to see any of Udi's sessions in the future, take it!”

Avi Nehama, R&D Project Manager at Retalix

“Not only is Udi a brilliant software architecture consultant, he also has remarkable abilities to present complex ideas in a simple and concise manner, and... always with a smile. Udi is indeed a top-league professional!”

Ben Scheirman, Lead Developer at CenterPoint Energy

“Udi is one of those rare people who not only deeply understands SOA and domain driven design, but also eloquently conveys that in an easy to grasp way. He is patient, polite, and easy to talk to. I'm extremely glad I came to his workshop on SOA.”

Scott C. Reynolds, Director of Software Engineering at CBLPath

“Udi is consistently advancing the state of thought in software architecture, service orientation, and domain modeling.

His mastery of the technologies and techniques is second to none, but he pairs that with a singular ability to listen and communicate effectively with all parties, technical and non, to help people arrive at context-appropriate solutions.

Every time I have worked with Udi, or attended a talk of his, or just had a conversation with him I have come away from it enriched with new understanding about the ideas discussed.”

Evgeny-Hen Osipow, Head of R&D at PCLine

“Udi has helped PCLine on projects by implementing architectural blueprints demonstrating the value of simple design and code.”

Rhys Campbell, Owner at Artemis West

“For many years I have been following the works of Udi. His explanations of often complex design and architectural concepts are so cleanly broken down that even the most junior of architects can begin to understand these concepts. These concepts however tend to typify the "real world" problems we face daily so even the most experienced software expert will find himself in an "Aha!" moment when following Udi's teachings.

It was a pleasure to finally meet Udi in Seattle Alt.Net OpenSpaces 2008, where I was pleasantly surprised at how down-to-earth and approachable he was. His depth and breadth of software knowledge also became apparent when discussion with his peers quickly dove deep into the problems we currently face. If given the opportunity to work with or recommend Udi I would quickly take that chance. When I think .Net Architecture, I think Udi.”

Sverre Hundeide, Senior Consultant at Objectware

“Udi had been hired to present the third LEAP master class in Oslo. He is a well-known international expert on enterprise software architecture and design, and is the author of the open source messaging framework nServiceBus.

The entire class was based on discussion and interaction with the audience, and the only Power Point slide used was the one showing the agenda.

He started out with sketching a naive traditional n-tier application (big ball of mud), and based on suggestions from the audience we explored different solutions which might improve the solution. Whatever suggestions we threw at him, he always had a thoroughly considered answer describing pros and cons with the suggested solution. He obviously has a lot of experience with real world enterprise SOA applications.”

Raphaël Wouters, Owner/Managing Partner at Medinternals

“I attended Udi's excellent course 'Advanced Distributed System Design with SOA and DDD' at Skillsmatter. Few people can truly claim such a high skill and expertise level, present it using a pragmatic, concrete no-nonsense approach and still stay reachable.”

Nimrod Peleg, Lab Engineer at Technion IIT

“One of the best programmers and software engineers I've ever met, creative, knows how to design and implement, very collaborative and finally - the applications he designed and implemented work for many years without any problems!”

Jose Manuel Beas